Neural Autoregressive Distribution Estimation

Recent advances in neural autoregressive generative modeling have led to impressive results in modeling images and audio, as well as in language modeling and machine translation. This post looks at a slightly older take on neural autoregressive models: the Neural Autoregressive Distribution Estimator (NADE) family of models. An autoregressive model is based on the fact that any D-dimensional distribution can be factored into a product of conditional distributions in any order:

$$p(\mathbf{x}) = \prod_{d=1}^{D} p(x_d \mid \mathbf{x}_{<d})$$

where $\mathbf{x}_{<d}$ denotes the dimensions that precede $x_d$ in the chosen ordering.

A fully visible sigmoid belief network. Figure taken from the NADE paper.

Here we have binary inputs

Neural Autoregressive Distribution Estimator. Figure taken from the NADE paper.

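Specifically, each conditional is parameterised as:

$$p(x_d = 1 \mid \mathbf{x}_{<d}) = \sigma(b_d + \mathbf{V}_{d,:}\,\mathbf{h}_d)$$

$$\mathbf{h}_d = \sigma(\mathbf{c} + \mathbf{W}_{:,<d}\,\mathbf{x}_{<d})$$

where $\mathbf{x}_{<d}$ are the inputs preceding $x_d$, $\mathbf{W}$ and $\mathbf{V}$ are weight matrices, $\mathbf{b}$ and $\mathbf{c}$ are biases, $\mathbf{h}_d$ is the hidden layer for dimension $d$, and $\sigma$ is the logistic sigmoid.

Modeling documents with NADE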

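In the standard NADE model, the inputs and outputs are binary variables. To work with sequences of text, the DocNADE model extends NADE by treating each element of the input sequence as a multinomial observation, in other words one of a predefined set of tokens from a fixed vocabulary. Likewise, the output must now also be multinomial, so a softmax layer is used at the output instead of a sigmoid. The DocNADE conditionals are then given by:

$$p(x_d = w \mid \mathbf{x}_{<d}) = \frac{\exp(b_w + \mathbf{V}_{w,:}\,\mathbf{h}_d)}{\sum_{w'} \exp(b_{w'} + \mathbf{V}_{w',:}\,\mathbf{h}_d)}$$

$$\mathbf{h}_d = g\Big(\mathbf{c} + \sum_{k<d} \mathbf{W}_{:,x_k}\Big)$$

where $\mathbf{W}_{:,x_k}$ is the column of $\mathbf{W}$ (the embedding) for word $x_k$, and $g$ is an element-wise nonlinearity (the TensorFlow code in the next section uses tanh).

An Overview of the TensorFlow code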

The full source code for our TensorFlow implementation of DocNADE is available on Github; here we will just highlight some of the more interesting parts. First we do an embedding lookup for each word in our input sequence (x). We initialise the embeddings uniformly in the range [0, 1.0 / (vocab_size * hidden_size)], a choice taken from the original DocNADE source code. I don’t think that this is mentioned anywhere else, but we did notice a slight performance bump when using this instead of the default TensorFlow initialisation.

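```python
import tensorflow as tf  # TensorFlow 1.x API (tf.get_variable)

# Keep the embedding matrix on the CPU.
with tf.device('/cpu:0'):
    # Upper bound of the uniform initialiser, as in the original DocNADE code.
    max_embed_init = 1.0 / (params.vocab_size * params.hidden_size)
    W = tf.get_variable(
        'embedding',
        [params.vocab_size, params.hidden_size],
        initializer=tf.random_uniform_initializer(maxval=max_embed_init)
    )
    # x holds word ids; the lookup gives [batch_size, seq_len, hidden_size].
    self.embeddings = tf.nn.embedding_lookup(W, x)
```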
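To give a feel for the scale of this initialisation, here is a minimal plain-Python sketch of the same idea. The function names `init_embeddings` and `embedding_lookup`, the seed, and the sizes (2000 words, 50 hidden units) are illustrative assumptions, not values taken from the implementation.

```python
import random


def init_embeddings(vocab_size, hidden_size, seed=0):
    """Uniform initialisation in [0, 1 / (vocab_size * hidden_size)]."""
    rng = random.Random(seed)
    max_embed_init = 1.0 / (vocab_size * hidden_size)
    return [
        [rng.uniform(0.0, max_embed_init) for _ in range(hidden_size)]
        for _ in range(vocab_size)
    ]


def embedding_lookup(W, token_ids):
    """Return one embedding row per token id, in sequence order."""
    return [W[token_id] for token_id in token_ids]


# Illustrative sizes only (assumed, not from the post).
W = init_embeddings(vocab_size=2000, hidden_size=50)
embedded = embedding_lookup(W, [3, 17, 3])
```

With these assumed sizes the upper bound is 1 / (2000 * 50) = 1e-5, so every embedding starts out very close to zero.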

We then use the tf.scan function to apply sum_embeddings to each sequence element in turn. This replaces each embedding with the sum of that embedding and the previously summed embeddings.

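```python
def sum_embeddings(previous, current):
    return previous + current

# tf.scan iterates over the first axis, so move the sequence axis to the front:
# [batch_size, seq_len, hidden_size] -> [seq_len, hidden_size, batch_size].
h = tf.scan(sum_embeddings, tf.transpose(self.embeddings, [1, 2, 0]))
# Back to [batch_size, seq_len, hidden_size].
h = tf.transpose(h, [2, 0, 1])

# Shift right by one step: prepend zeros and drop the last position, so that
# position i only sees the embeddings of the words before it.
h = tf.concat([
    tf.zeros([batch_size, 1, params.hidden_size], dtype=tf.float32), h
], axis=1)
h = h[:, :-1, :]

# Add the hidden bias and apply the nonlinearity.
bias = tf.get_variable(
    'bias',
    [params.hidden_size],
    initializer=tf.constant_initializer(0)
)
h = tf.tanh(h + bias)

# Flatten to [batch_size * seq_len, hidden_size] and project onto the vocabulary.
# linear and masked_sequence_cross_entropy_loss are helpers defined elsewhere (not shown here).
h = tf.reshape(h, [-1, params.hidden_size])
logits = linear(h, params.vocab_size, 'softmax')
loss = masked_sequence_cross_entropy_loss(x, seq_lengths, logits)
```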
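To make the running-sum and shift-by-one steps concrete, the same computation can be sketched for a single document in plain Python. This is an illustrative re-implementation under assumptions, not the code from the repository: the function name `docnade_log_likelihood` and the parameter names `c`, `V` and `b` (hidden bias, output weights, output bias) are hypothetical, and there is no batching or masking.

```python
import math


def docnade_log_likelihood(tokens, W, c, V, b):
    """Log-probability of one token sequence under a DocNADE-style model.

    tokens: list of int word ids
    W: embeddings, vocab_size rows of hidden_size floats
    c: hidden bias, hidden_size floats
    V: output weights, vocab_size rows of hidden_size floats
    b: output bias, vocab_size floats
    """
    running_sum = [0.0] * len(c)  # sum of embeddings of the tokens seen so far
    total = 0.0
    for token in tokens:
        # The hidden state uses only the previous tokens (the shift-by-one step).
        h = [math.tanh(s + c_j) for s, c_j in zip(running_sum, c)]
        logits = [
            b_w + sum(v_j * h_j for v_j, h_j in zip(V_w, h))
            for V_w, b_w in zip(V, b)
        ]
        # Numerically stable log-softmax over the vocabulary.
        m = max(logits)
        log_z = m + math.log(sum(math.exp(l - m) for l in logits))
        total += logits[token] - log_z
        # Only now add the current token's embedding (the running-sum step).
        running_sum = [s + e for s, e in zip(running_sum, W[token])]
    return total
```

Because each conditional is a proper softmax, the probabilities of all sequences of a given length sum to one, which is a handy sanity check for a sketch like this.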

Experiments

As DocNADE computes the probability of the input sequence, we can measure how well it generalises by computing the probability of a held-out test set. In the paper the actual metric that they use is the average perplexity per word.

| Model/paper | Perplexity |
| --- | --- |
| DocNADE (original) | 896 |
| Neural Variational Inference | 836 |
| DeepDocNADE | 835 |
| DocNADE with full softmax | 579 |
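As a rough sketch of how an average per-word perplexity figure can be computed, assume we already have the natural-log probability of each test document and its length in words. The definition used here, exp(-(1/N) Σ_t log p(doc_t) / |doc_t|) over N test documents, is my reading of the DocNADE paper's metric; the function name is hypothetical.

```python
import math


def average_perplexity_per_word(doc_log_probs, doc_lengths):
    """exp of the negative mean per-word log-probability, averaged over documents."""
    per_word = [lp / n for lp, n in zip(doc_log_probs, doc_lengths)]
    return math.exp(-sum(per_word) / len(per_word))
```

As a sanity check, a model that assigns every word a uniform probability over a vocabulary of size V has a perplexity of V under this definition, whatever the document lengths.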

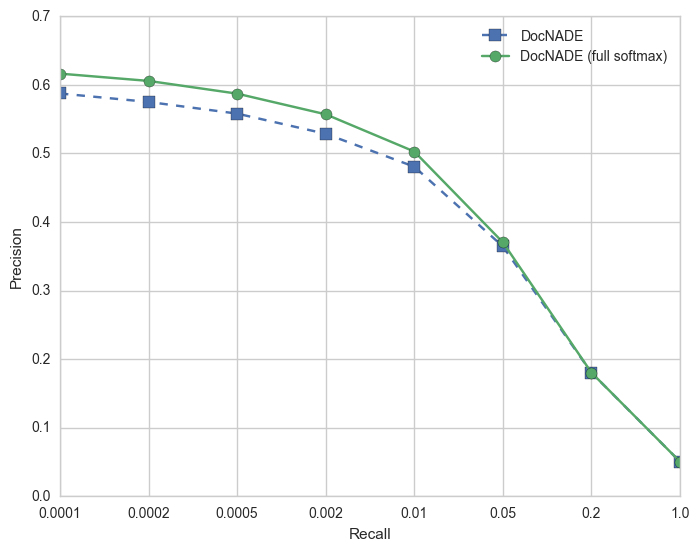

For the retrieval evaluation, we first create vectors for every document in the dataset. We then use the held-out test set vectors as “queries”, and for each query we find the closest N documents in the training set (by cosine similarity). We measure what percentage of these retrieved training documents have the same newsgroup label as the query document, and plot a curve of the retrieval performance for different values of N.

Note: for working with larger vocabularies, the current implementation supports approximating the softmax using the sampled softmax.
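The retrieval evaluation described above can be sketched in a few lines of plain Python. This is a minimal illustration under assumptions, not the evaluation script used for the plot: the function names are hypothetical, document vectors are plain lists of floats, and ties in similarity are broken by training-set order.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (0.0 if either has zero norm)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm > 0 else 0.0


def retrieval_precision(query_vecs, query_labels, train_vecs, train_labels, n):
    """Mean fraction of the n nearest training documents sharing the query's label."""
    precisions = []
    for q_vec, q_label in zip(query_vecs, query_labels):
        # Rank all training documents by similarity to the query, highest first.
        ranked = sorted(
            range(len(train_vecs)),
            key=lambda i: cosine_similarity(q_vec, train_vecs[i]),
            reverse=True,
        )
        top = ranked[:n]
        hits = sum(1 for i in top if train_labels[i] == q_label)
        precisions.append(hits / len(top))
    return sum(precisions) / len(precisions)
```

Calling `retrieval_precision` for a range of values of `n` gives the points of the retrieval curve. For a real dataset a vectorised implementation would be much faster; the loop form is kept here only for readability.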

Conclusion

We took another look at DocNADE, noting that it can be viewed as another way to train word embeddings. We also highlighted the potential for large performance boosts with older models due simply to modern computational improvements, in this case because it is no longer necessary to approximate the softmax for smaller vocabularies. The full source code for the model is available on Github.
